Subsection Selection Strategies

This is great! We have a scalable way to approximate areas, and it seems like we can pretty easily increase the precision of our approximations by increasing \(n\text{,}\) the number of slices/rectangles that we use. And the great thing about this is that when we do increase \(n\text{,}\) we don’t increase the complexity of our calculations!

Sure, it would be tedious to calculate and add 100 areas of rectangles by hand, but those area calculations don’t get more difficult: there are just more of them.

The only real downside is that when we increase the number of slices/rectangles, we are really increasing the number of decisions that we have to make: we have to choose an \(x_k^*\) for each subinterval. So while it isn’t hard to just calculate a bunch of areas and add them up, it is difficult, on a human level, to make a bunch of decisions about which \(x\)-value to choose from each subinterval. But this decision isn’t even that important!

We use the “star” notation on the \(x_k^*\) to represent the fact that it really doesn’t matter which \(x\)-value gets chosen from the subinterval: as long as we pick one, we get an approximation! And when \(n\) increases, it matters less and less what the actual \(x\)-value is: as long as our function \(f(x)\) is continuous, there will not be much variation among the \(y\)-value outputs for the \(x\)-values in each (small) subinterval!
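To see this shrinking variation numerically, here is a minimal sketch. The function \(f(x) = x^2\text{,}\) the interval \([0,1]\text{,}\) the equal-width subintervals, and the helper names `riemann_sum` and `random_pick` are all assumptions chosen for illustration. It builds two Riemann sums with randomly chosen \(x_k^*\) values and prints how far apart they are as \(n\) grows.

```python
import random


def riemann_sum(f, a, b, n, pick):
    """Add up f(x_k*) * dx over n equal-width subintervals of [a, b].

    pick(left, right) returns the sample point x_k* for one subinterval.
    """
    dx = (b - a) / n
    total = 0.0
    for k in range(n):
        left = a + k * dx
        right = left + dx
        total += f(pick(left, right)) * dx
    return total


def f(x):
    # Illustrative function only; any continuous function would do.
    return x ** 2


rng = random.Random(0)  # fixed seed so repeated runs give the same picks


def random_pick(left, right):
    # An arbitrary x-value somewhere in the subinterval.
    return rng.uniform(left, right)


gaps = {}
for n in (10, 100, 1000):
    s1 = riemann_sum(f, 0.0, 1.0, n, random_pick)
    s2 = riemann_sum(f, 0.0, 1.0, n, random_pick)
    gaps[n] = abs(s1 - s2)
    print(n, s1, s2, gaps[n])
```

Because this particular \(f\) is increasing on \([0,1]\text{,}\) two Riemann sums with the same \(n\) can differ by at most \((f(1)-f(0))\cdot\frac{1}{n} = \frac{1}{n}\text{,}\) so the printed gap has to shrink as \(n\) grows, no matter which \(x\)-values were picked.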

All of this to say: let’s make a single decision about how to pick all \(n\) of the \(x\)-values from the \(n\) subintervals instead of having to make \(n\) separate decisions (one for each \(x\)-value).

Left, Right, and Midpoint Riemann Sums.

When we build a Riemann sum, we can choose the values for \(x_k^*\) (for \(k=1,2,\ldots, n\)) systematically. There are many ways of doing this, but here are three:

- Left Riemann Sum: We pick the left-most \(x\)-value from each subinterval. That is, if the partition is \(\{a=x_0, x_1, x_2, \ldots, b=x_n\}\text{,}\) then we choose \(\{a, x_1, x_2, \ldots, x_{n-1}\}\) as the \(x\)-values at which we evaluate \(f\) for the rectangle heights. We refer to this as \(L_n\text{,}\) a Left Riemann sum with \(n\) rectangles.

- Right Riemann Sum: We pick the right-most \(x\)-value from each subinterval. That is, if the partition is \(\{a=x_0, x_1, x_2, \ldots, b=x_n\}\text{,}\) then we choose \(\{x_1, x_2, \ldots, b\}\) as the \(x\)-values at which we evaluate \(f\) for the rectangle heights. We refer to this as \(R_n\text{,}\) a Right Riemann sum with \(n\) rectangles.

- Midpoint Riemann Sum: We pick the \(x\)-value that is in the middle of each subinterval. That is, if the partition is \(\{a=x_0, x_1, x_2, \ldots, b=x_n\}\text{,}\) then we choose \(\left\{\frac{a+x_1}{2}, \frac{x_1+x_2}{2}, \ldots, \frac{x_{n-1}+b}{2}\right\}\) as the \(x\)-values at which we evaluate \(f\) for the rectangle heights. We refer to this as \(M_n\text{,}\) a Midpoint Riemann sum with \(n\) rectangles.
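The three selection strategies can be sketched in a few lines of code. This is only an illustration under some assumptions: the rectangles all have equal width, the function names `left_sum`, `right_sum`, and `midpoint_sum` are made up for this example, and \(g(x) = x^2\) on \([0,2]\) with \(n=4\) is an arbitrary test case.

```python
def left_sum(f, a, b, n):
    """L_n: heights come from the left endpoint of each subinterval."""
    dx = (b - a) / n
    return sum(f(a + k * dx) for k in range(n)) * dx


def right_sum(f, a, b, n):
    """R_n: heights come from the right endpoint of each subinterval."""
    dx = (b - a) / n
    return sum(f(a + k * dx) for k in range(1, n + 1)) * dx


def midpoint_sum(f, a, b, n):
    """M_n: heights come from the midpoint of each subinterval."""
    dx = (b - a) / n
    return sum(f(a + (k + 0.5) * dx) for k in range(n)) * dx


def g(x):
    # Illustrative function only.
    return x ** 2


L4 = left_sum(g, 0.0, 2.0, 4)
R4 = right_sum(g, 0.0, 2.0, 4)
M4 = midpoint_sum(g, 0.0, 2.0, 4)
print(L4, R4, M4)  # 1.75 3.75 2.625
```

Notice that each function makes one decision (left, right, or middle) and then applies it to every subinterval. For comparison, the exact area under \(g\) on \([0,2]\) is \(\frac{8}{3} \approx 2.667\text{,}\) so here \(L_4\) is an underestimate, \(R_4\) is an overestimate, and \(M_4\) lands closest.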

None of this is a requirement for a Riemann sum, but we will consistently find that when we limit the number of decisions that we have to make, the complexity of the calculation decreases.

Notice that we’ve already made a similar choice with how we calculate \(\Delta x\text{:}\) it is not required that each rectangle have the same width, but it is very nice to not have to think about \(n\) different widths!
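With both choices in place, each sum can be written compactly. The formulas below are a sketch that assumes the equal-width convention \(\Delta x = \frac{b-a}{n}\) on the interval \([a,b]\text{,}\) so that the partition points are evenly spaced:

\[
\Delta x = \frac{b-a}{n}, \qquad x_k = a + k\,\Delta x \quad (k = 0, 1, \ldots, n)
\]

\[
L_n = \sum_{k=1}^{n} f(x_{k-1})\,\Delta x, \qquad
R_n = \sum_{k=1}^{n} f(x_k)\,\Delta x, \qquad
M_n = \sum_{k=1}^{n} f\!\left(\frac{x_{k-1}+x_k}{2}\right)\Delta x
\]

In each case, the only thing that changes is which \(x\)-value from the \(k\)th subinterval \([x_{k-1}, x_k]\) plays the role of \(x_k^*\text{.}\)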

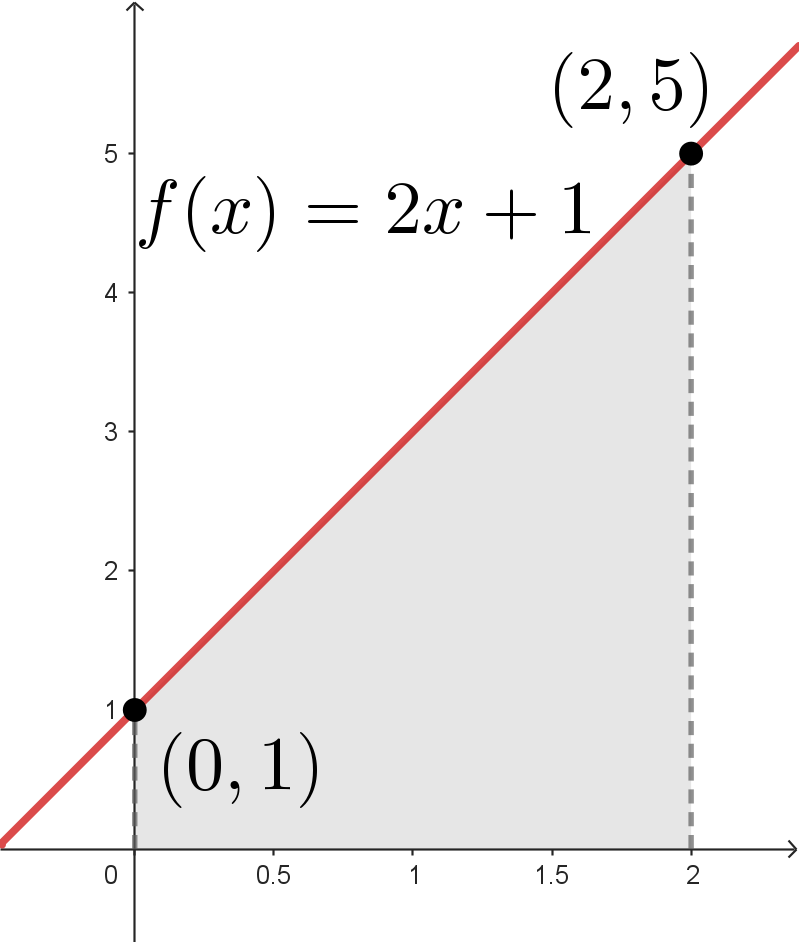

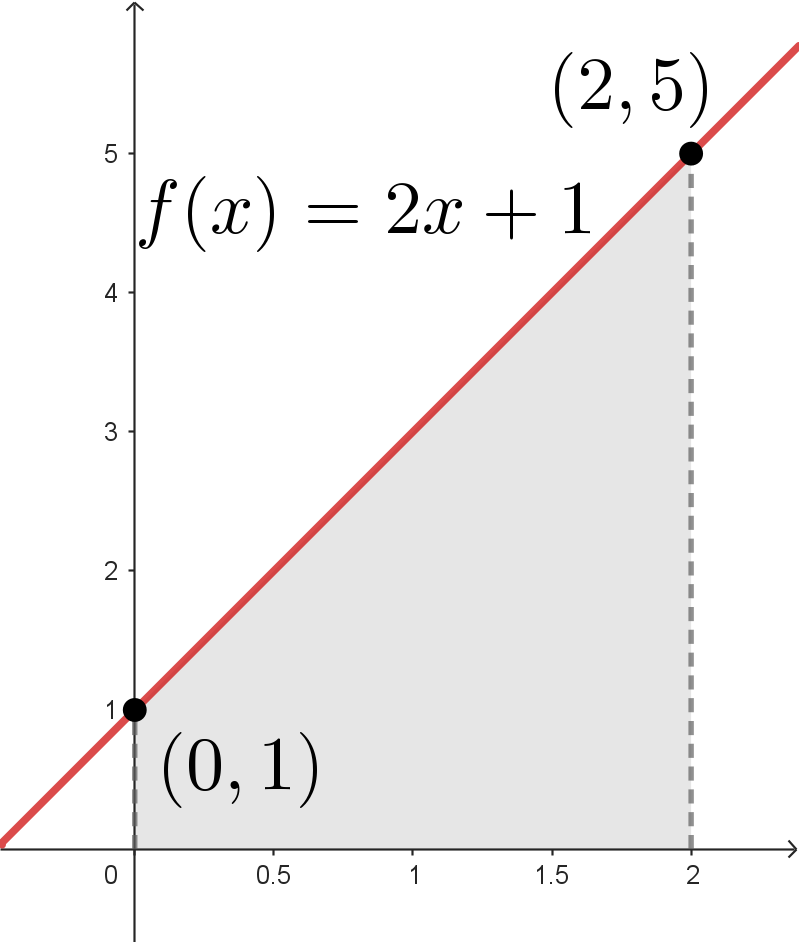

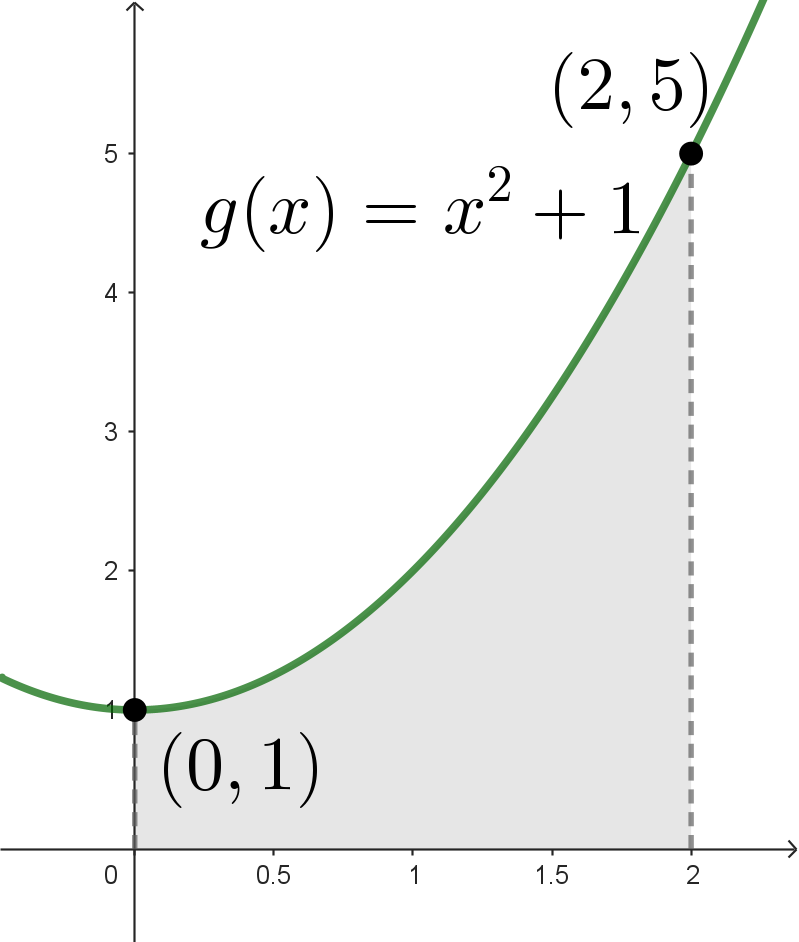

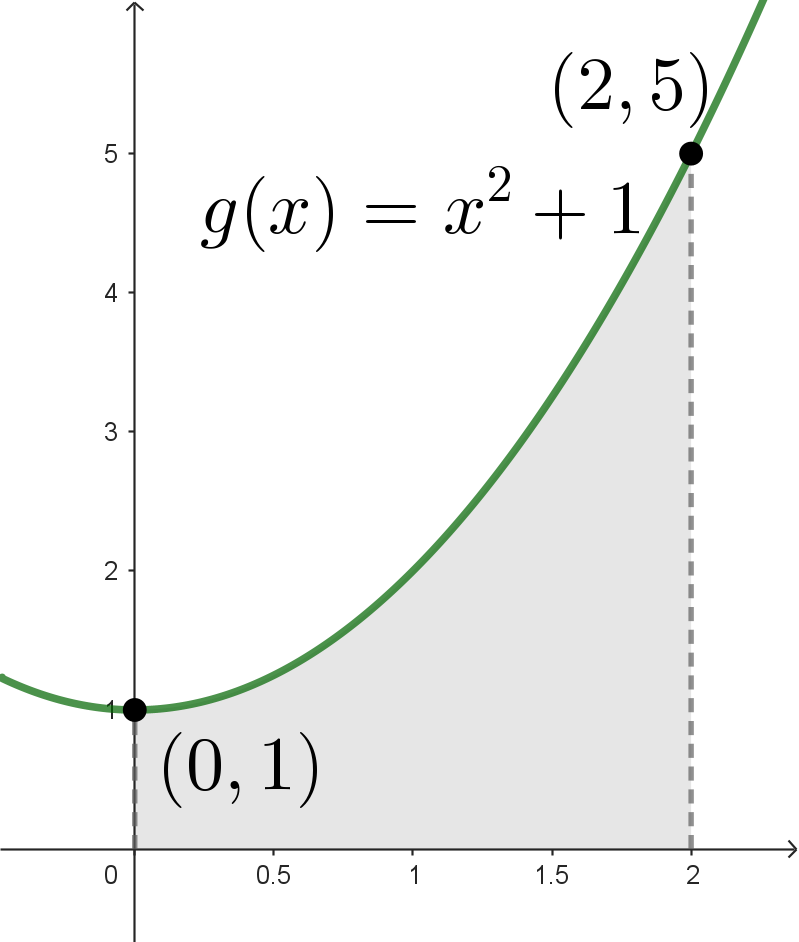

Lastly, we’ll finish with a nice interactive Riemann sum calculator. Feel free to explore some different graphs and see how the Riemann sums work when we change how we select the values for \(x_k^*\) as well as when we change the number of rectangles, \(n\text{.}\)